Thesis: Cubical Marching Squares Implementation

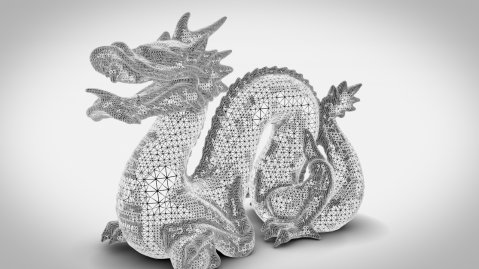

A mesh extracted at with an adaptive resolution (between 4-256) using the partial implementation of the Cubical Marching Squares algorithm, implemented for my masters thesis.

Cubical Marching Squares (CMS) (Ho et al. 2005) is an isosurface extraction algorithm proposed by a group of researchers from National Taiwan University, in 2005. It is of the “spatial sampling” type of isosurface extraction algorithms, and is based on Marching Cubes (MC) (Lorensen and Cline 1987), however claims to deal with all the problems of original MC as well as others which arise with later algorithms.

For more information on this specific implementation, please refer to THE THESIS, which was written for the project. In which I describe the methodology of my implementation in great detail.

This project began as part of my masters thesis for the MSc CAVE course @ NCCA – Bournemouth Uni. I managed to write a partial (loose) implementation of the CMS algorithm. Which results in manifold, adaptive (simplified) meshes. Some of the results which were obtained for the thesis, along with comparisons with non-adaptive MC meshes, can be seen below, (their turntables in the video).

Results

The Isosurface Extraction C++ Library

As this project resulted in a C++ library (currently having only the partial CMS implementation), I would like to make it an open source library as soon as I clean it up. This, I believe would be useful, as there is hardly any other implementation of the CMS algorithm in the public domain. Matt Keeter has a partial C implementation (very neat and well written) in the libFab library of the Kokopelli project, which is also open source. Apart from him, I have not come across any other working implementation of the CMS algorithm, in the whole internet.

The link to the code will be posted here, soon!

Future Work

A detailed Future Work section could be seen in the thesis (Chapter 6: Conclusion).

Apart from that, I hope to continue working on the library, in my spare time. After some very necessary changes and optimisations in the octree creation stage (the current bottleneck), I would be pursuing a full implementation of the CMS algorithm. A full implementation would consist of 2D and 3D sharp feature preservation, as well as face and internal disambiguation. I will keep you updated…

References

Hi, very interesting work !

Is it possible to do level of detail with this kind of representation, by merging the 8 leaf cells in their parent cell (and what about their associated faces) ?

I have tried to think about it but I find that merging the cells could generate cracks and alter the topology of the model and I do not see in this case how the information contained in the face cells can help to repair the cracks and topology.

Hi,

It really depends on what exactly you mean by LOD. If you mean simply “adaptive” (or “simplified”) meshing, then yes, it already works, and this without the need for a process called “crack-patching”, due to the way the algorithm works.

This means that some parts of the mesh will be more detailed than others. Currently, as in the original algorithm, i use 2 conditions to check whether to subdivide a given cell. They are the ‘complex-surface’ check and the ‘edge ambiguity’ check (you can read more about them in the thesis i posted – under section ‘Implementation’). Now, for LOD in the sense of terrain there could be yet another condition which checks for distance from the camera, for example.

Keep in mind that then the whole octree would have to be recalculated each time the LOD registers a change because only the leaf cells store mesh parts, which is not good especially if u want real time applications.

Therefore a better way for performing LOD and using this algorithm, I think would, involve the pre-computation of the mesh on each level (say up till level 8). And storing all the mesh details for each cell level in every cell. Then when the LOD engine denotes a change, the correct level information will be invoked from the cell and the whole octree needn’t be recomputed. I only speculate however, that this might work.

As for the cracks, the way this algorithm avoids them altogether is by taking the information from a cell of deeper subdivision (which has subcells which are leafs) and using this information in it’s neighbouring leaf-cell which is being computed currently. (It is a bit hard to explain but, the idea is rather simple, check again my thesis under section ‘Implementation’, where I try to describe what I do, better. Read about the transitional faces, as they are the ones which cause cracks if they are not handled properly.)

I apologise if I completely missed the point.

George.

Thanks for the reply,

For the adaptive part, I understood how it works, and it’s a feature that is interesting, it seems very natural in this algorithm.

> Now, for LOD in the sense of terrain there could be yet another

> condition which checks for distance from the camera, for example.

> Keep in mind that then the whole octree would have to be recalculated

> each time the LOD registers a change because only the leaf cells store

> mesh parts, which is not good especially if u want real time applications.

Yes, that was what I was thinking of.

For example, say you have 2 cells (A and B), each one of them containing 8 leaf-cells (A1 to A8 and B1 to B8). They share a common face (say “F_ab”) which is not a leaf face (the leaf faces would be F_ab1 to F_ab4)

We decide to render normally the leaf cells of A (so we render each mesh part contained in A1 to A8) which are close enough to the camera, but we want to render B which is further from the camera, without considering its children.

Is it possible to :

– Treat B as if it were a leaf cell (the problem is that the 2 conditions, edge ambiguity and complex surface, might not be verified for B).

– Trace segments on F_ab with the information we have at B level

– We already have the segments on the F_abX faces

– The problem would be to link segments that are on the F_abX faces with the ones on the F_ab face (computed from B).

That would not need to recompute the whole octree, only the cells for which we want to apply this mecanism.

I think I’ll read again your thesis so I understand fully the algorithm, and see if what I am saying is consistent.

I was aiming to implement Dual Contouring at first, but CMS really took my attention, I might give it a try.

I’d love to see this in a real time application, fully implemented on GPU 🙂

Franck

Ok, I think I get the problem you are referring to. But as I said in the last comment, in order to use a non-leaf cell as a leaf, at some point, you would have to have all those levels pre-computed as if they were leafs, and store this information somewhere. (this way the 2 conditions would be verified)

Now when we come to the point of creating the mesh from the subcells of A and B (as a leaf), then we do not need to link the segments of F_ab with those of F_abX but we simply use the lower subdivision, in this case we use the information from F_abX on that cell wall. This way they will match up with the rest of the vertices on the neighbouring cell A. This should prevent cracks.

However keep in mind the following:

My implementation of CMS is slightly different in exactly that part, from the original. Because to be honest I couldn’t quite understand theirs. That’s why I use a half-face data structure to be able to retrieve information for each face from it’s twin face (or the other side of the same face). This is how I do it, but this twin-face assignment is currently the bottleneck of the whole algorithm. So it either has to be optimised or another way has to be chosen.

DC is possible for LOD as I am sure you have seen these guys’ work:

http://www.terathon.com/voxels/

But a full CMS implementation would be very interesting.

Hello!

Do you think it is possible to do multiple materials with CMS, while still relying on an octree? I am not very experienced in this field and can’t think of a good way of doing this.

Cheers, Milan Sobota

Hi Milan,

Sorry for taking a while to reply.

I don’t know, would be the honest answer. I am not sure what you mean by multiple materials, could you please guide me to an article or paper which explains the concept, maybe then I would be able to give a more helpful answer.

Your work looks really impressive! Do you have any plans to publish the source code?

Hi Michael,

Yes, that was the original idea. I was hoping I would do it much sooner, however I didn’t have much time and had to abandon it for a few months. The main problem with publishing the code straight away is that there is still this bottleneck in the octree creation stage which i really want to get rid of. Having said that the past few days I have started gradually re-factoring the code. I cannot give promises but hopefully in the not-so-far future I will publish it, perhaps while continuing work on it. Stay tuned.

George.

Hi George,

that sounds great! You should definitely not wait too long. There are only very few implementations out there right now. You could really set new standards here! And you still can also get rid of the octree bottleneck after you publish the code 😉

Regards,

Michael

Hello George! Some months have passed – did you solve the problems you had with the code? Are you still planning to publish it? I would love to see a working example. Regards, Jörg

Hello George,

Hope you get this, so if you have a situation where if you have a limit to the depth the tree can go, yet in a cube/voxel has two crossings on the same edge @ maxTreedepth. This would normally cause the cube to be split again yet due to the limit and it does not. So when you go to trace the components or a loop it fails and it cannot create edges. I would add a picture but I think the concept is understandable. So what do you do to solve that problem?

Hi Eric,

I’m not sure if I understood the problem correctly and it’s been a while since i last looked at the code, but if you mean that there is more detail which does not come through because of the octree max limit is reached, then that’s what it’s supposed to do. That is why the values have to be tweaked for optimal results with every different function. Try increasing the octree max depth. If you think that there should be more detail preserved, but the octree does not subdivide when it should, then try tweaking the complex surface threshold. Also note that this is not a full implementation of the CMS algorithm and it doesn’t have sharp-feature preservation, so such detail might be lost.

Hope that helps,

George.

Yes you are correct, that is my issue. I did try extending the resolution like you said, meaning allowing it to go much deeper in the tree depth; but, it still gave me double crossings on a voxel edge. I’m starting to realize that even if you don’t or do have a limit, that is the condition you must resolve. Otherwise it keeps parsing till it fails or a near zero volume voxel I assume we can ignore resulting in a tiny hole but it adds a ton of new voxels that are not needed. IF we finally end the resolution at something reasonable say 8-10 deep from their paper, we have no choice but to deal with that issue ( voxel with a double crossed edge) because that is the final show stopper problem I’m consistently running into.

There does seem to be a speed issue I’m seeing as well, they publish @ table 4 -> 75,084 triangles @ .208 sec’s have no idea how they got that speed seems highly inflated especially on a Pentuim 4. And if they have a model with sharp corners the SVD function is brutal on time and I have also found that it doesn’t always solve the solution correctly.